In this tutorial, we’ll look at how to train a YOLO model for object recognition on specific data. The difficulty lies in creating the image bank that will be used for training.

Hardware

- A computer with a Python3 installation

- A camera

Principle

We saw in a previous tutorial how to recognize objects with YOLO. The pre-trained model can only detect a fixed list of 80 object classes:

{0: 'person', 1: 'bicycle', 2: 'car', 3: 'motorcycle', 4: 'airplane', 5: 'bus', 6: 'train', 7: 'truck', 8: 'boat', 9: 'traffic light', 10: 'fire hydrant', 11: 'stop sign', 12: 'parking meter', 13: 'bench', 14: 'bird', 15: 'cat', 16: 'dog', 17: 'horse', 18: 'sheep', 19: 'cow', 20: 'elephant', 21: 'bear', 22: 'zebra', 23: 'giraffe', 24: 'backpack', 25: 'umbrella', 26: 'handbag', 27: 'tie', 28: 'suitcase', 29: 'frisbee', 30: 'skis', 31: 'snowboard', 32: 'sports ball', 33: 'kite', 34: 'baseball bat', 35: 'baseball glove', 36: 'skateboard', 37: 'surfboard', 38: 'tennis racket', 39: 'bottle', 40: 'wine glass', 41: 'cup', 42: 'fork', 43: 'knife', 44: 'spoon', 45: 'bowl', 46: 'banana', 47: 'apple', 48: 'sandwich', 49: 'orange', 50: 'broccoli', 51: 'carrot', 52: 'hot dog', 53: 'pizza', 54: 'donut', 55: 'cake', 56: 'chair', 57: 'couch', 58: 'potted plant', 59: 'bed', 60: 'dining table', 61: 'toilet', 62: 'tv', 63: 'laptop', 64: 'mouse', 65: 'remote', 66: 'keyboard', 67: 'cell phone', 68: 'microwave', 69: 'oven', 70: 'toaster', 71: 'sink', 72: 'refrigerator', 73: 'book', 74: 'clock', 75: 'vase', 76: 'scissors', 77: 'teddy bear', 78: 'hair drier', 79: 'toothbrush'}

The model can be trained to recognize additional objects or other objects using a suitable image bank.
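Before building an image bank, it helps to check which of the objects you need are already covered by the pre-trained classes. The sketch below is only an illustration: it uses a small subset of the table above, and `wanted_objects` is a hypothetical list. With a loaded model you would pass `model.names` instead of the hand-written dictionary.

```python
# Subset of the class table printed above (ids and names copied from it).
PRETRAINED_NAMES = {39: 'bottle', 40: 'wine glass', 41: 'cup', 45: 'bowl', 75: 'vase'}


def missing_classes(wanted, names):
    """Return the wanted labels that are absent from the class table."""
    known = set(names.values())
    return [label for label in wanted if label not in known]


wanted_objects = ['cup', 'mug', 'bottle']  # hypothetical project needs
to_train = missing_classes(wanted_objects, PRETRAINED_NAMES)
print(to_train)  # ['mug']
```

Only the labels returned by this check need new training images.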

Python configuration

If Python 3 is not already installed on your computer, download and install it.

You can then install the required libraries (imutils, OpenCV and ultralytics):

python3 -m pip install imutils opencv-python ultralytics

Data configuration

Once you’ve created an image database with labels and boxes in YOLO format, place it in the YOLO\datasets folder. You can then describe it in a YAML file (coffee_mug_v8.yaml in the training script further down) in which you specify:

- the path to the database contained in datasets (coffee_mug)

- the folder of training images (train)

- the folder of validation images (val)

- the class names (names)

path: coffee_mug/
train: 'train/'
val: 'test/'
# class names
names:
  0: 'mug'
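In YOLO format, each image has a text file with one line per object: the class id, then the box center and size, all normalized by the image dimensions. The helper below is a minimal sketch of that conversion from pixel coordinates. The function name and the sample box are illustrative assumptions, not part of any library.

```python
def to_yolo_line(class_id, box, image_size):
    """Convert a pixel box (xmin, ymin, xmax, ymax) to a YOLO label line."""
    xmin, ymin, xmax, ymax = box
    width, height = image_size
    # YOLO stores the box center and size as fractions of the image size.
    x_center = (xmin + xmax) / 2 / width
    y_center = (ymin + ymax) / 2 / height
    box_w = (xmax - xmin) / width
    box_h = (ymax - ymin) / height
    return f"{class_id} {x_center:.6f} {y_center:.6f} {box_w:.6f} {box_h:.6f}"


# Hypothetical mug box in a 640x480 image, class 0 ('mug').
line = to_yolo_line(0, (160, 120, 480, 360), (640, 480))
print(line)  # 0 0.500000 0.500000 0.500000 0.500000
```

Labeling tools normally write these files for you. The sketch is mainly useful for checking or converting existing annotations.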

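N.B.: you can pass paths as image directories, text files or lists of directories. The example below combines the mug images with the COCO128 dataset and adds 'mug' to the end of the existing class list.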

# train and val data as 1) directory: path/images/, 2) file: path/images.txt, or 3) list: [path1/images/, path2/images/]

train: [./coco128/images/train2017/, coffee_mug/train/]

val: [./coco128/images/train2017/, coffee_mug/test/]

# class names

names: ['person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat', 'traffic light',

'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow',

'elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee',

'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard',

'tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple',

'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch',

'potted plant', 'bed', 'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone',

'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors', 'teddy bear',

'hair drier', 'toothbrush', 'mug']
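In this combined list, 'mug' comes after the 80 original names (ids 0 to 79), so its class id is 80 rather than 0. If the label files were first written for the single-class configuration, their class ids have to be rewritten. The sketch below shows one way to do that for a single label line. It assumes the labels use id 0 for mugs, and the helper name is hypothetical.

```python
def remap_label_line(line, id_map):
    """Rewrite the class id of one YOLO label line, leaving the box untouched."""
    class_id, *coords = line.split()
    new_id = id_map.get(int(class_id), int(class_id))
    return " ".join([str(new_id), *coords])


# 'mug' is class 0 in the single-class file and class 80 in the combined list.
MUG_ONLY_TO_COMBINED = {0: 80}

old_line = "0 0.500000 0.500000 0.250000 0.300000"
new_line = remap_label_line(old_line, MUG_ONLY_TO_COMBINED)
print(new_line)  # 80 0.500000 0.500000 0.250000 0.300000
```

Applying it to every line of every label file keeps the boxes unchanged and only updates the class id.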

Retrieving a pre-trained model

From the Python script, you can load a pre-trained model that serves as the basis for training the new one. If the weights file is not found locally, ultralytics downloads it on first use.

from ultralytics import YOLO

# load the pre-trained YOLOv8n model
model = YOLO("yolov8n.pt")

N.B.: choose the model variant that suits your machine and your needs. YOLOv8 comes in several sizes (n, s, m, l, x) that trade speed for accuracy.

Python script for Yolo training

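Once your image bank is ready, the training script is quite simple. Just specify:

- the name of the training run, used for the results folder (name='yolov8n_v8_50e')

- the number of passes over the training images (epochs)

- the YAML file describing the database to be used (data)

- the size to which images are resized for training (imgsz)

- the number of images processed together in one training step (batch)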
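To make epochs and batch concrete, the short calculation below estimates how many batches a run processes. It is a rough sketch: the dataset size of 200 images is hypothetical, and it assumes the last, partially filled batch of each epoch is still processed.

```python
import math


def training_steps(num_images, batch, epochs):
    """Return (batches per epoch, total batches) for a training run."""
    per_epoch = math.ceil(num_images / batch)
    return per_epoch, per_epoch * epochs


# 200 training images is a hypothetical dataset size.
per_epoch, total = training_steps(num_images=200, batch=8, epochs=50)
print(per_epoch, total)  # 25 1250
```

A larger batch means fewer steps per epoch but more memory per step, which is why batch is usually limited by the GPU or RAM available.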

train_yolo.py

from ultralytics import YOLO

# Load the model.
model = YOLO('yolov8n.pt')

# Training.
results = model.train(
    data='coffee_mug_v8.yaml',
    imgsz=1280,
    epochs=50,
    batch=8,
    name='yolov8n_v8_50e'
)

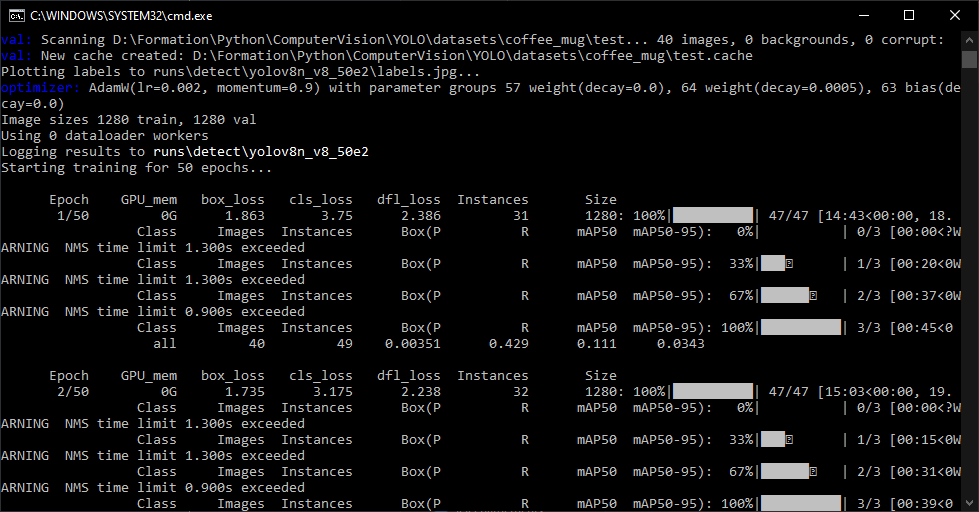

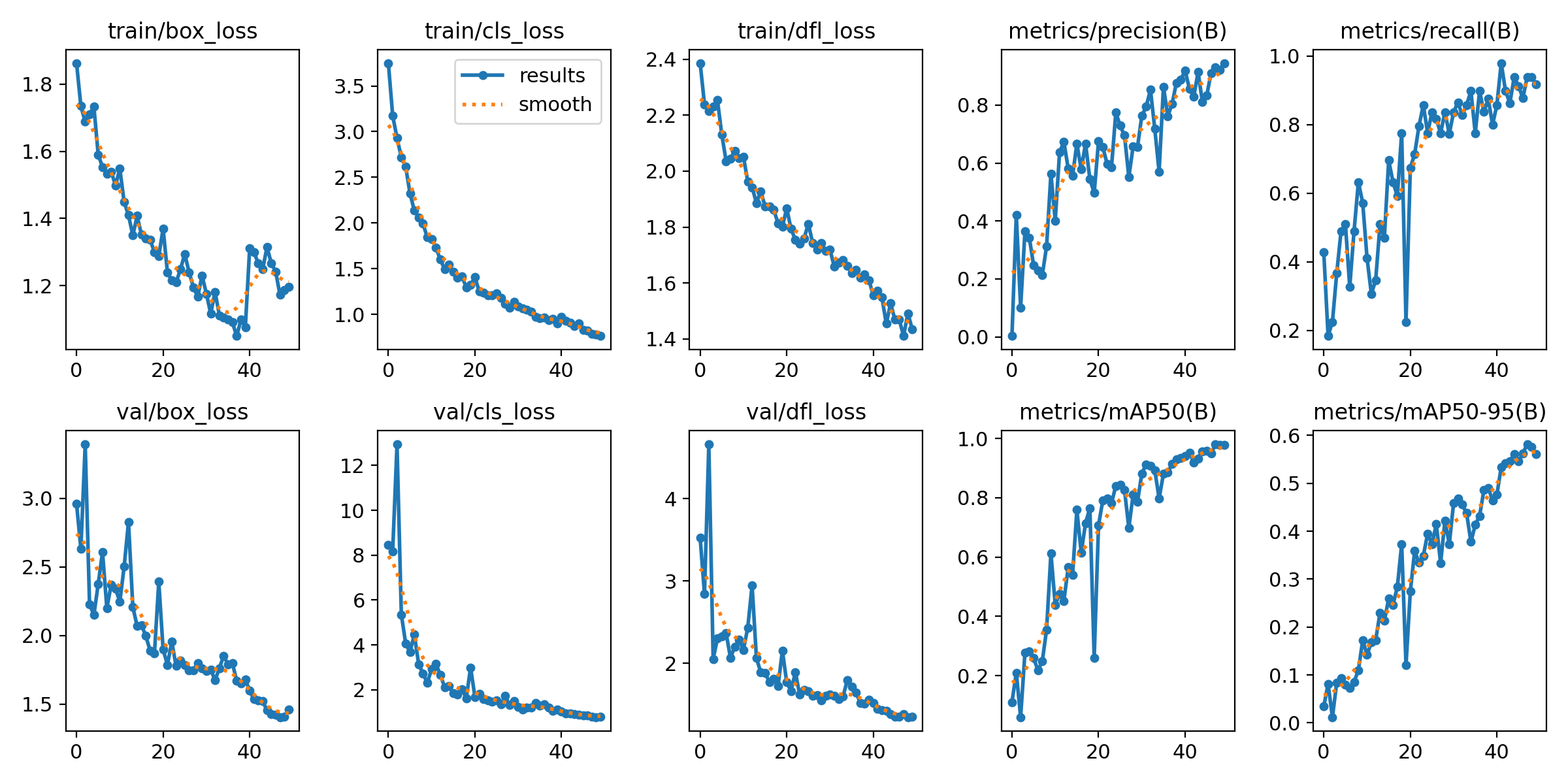

During training, ultralytics saves metrics, plots and the model weights, which you can review afterwards to analyze the run. The results can be found in the .\runs\detect\ folder.

Python script for model evaluation

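Once the model has been trained, you can evaluate its performance on new images.

To load the trained model, either copy it to the project root or give its full path. Ultralytics appends a number to the run name when a folder with that name already exists, which is why the folder below is named yolov8n_v8_50e2:

./runs/detect/yolov8n_v8_50e2/weights/best.pt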
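Because the run folder name can gain a numeric suffix, it can be convenient to look the weights file up instead of typing the path by hand. The sketch below does this with the standard library only. The helper name is hypothetical, and it assumes the runs/detect/<run name>/weights/best.pt layout shown in the path above.

```python
from pathlib import Path


def find_best_weights(runs_dir, run_name):
    """Return best.pt paths of all runs whose folder starts with run_name, oldest first."""
    candidates = Path(runs_dir).glob(f"{run_name}*/weights/best.pt")
    return sorted(candidates, key=lambda p: p.stat().st_mtime)


if __name__ == "__main__":
    found = find_best_weights("./runs/detect", "yolov8n_v8_50e")
    # The last entry is the most recently written weights file.
    print(found[-1] if found else "no trained weights found")
```

The returned path can be passed directly to YOLO(...) in the evaluation script.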

#!/usr/bin/env python
# -*- coding: utf-8 -*-

from ultralytics import YOLO
import cv2
from imutils.video import VideoStream  # not used below; only needed for webcam input

# define some constants
CONFIDENCE_THRESHOLD = 0.65
GREEN = (0, 255, 0)

image_list = ['./datasets/coffee_mug/test/10.png', './datasets/coffee_mug/test/19.png']

# load the trained model (use YOLO("yolov8n.pt") to compare with the pre-trained one)
model = YOLO("./runs/detect/yolov8n_v8_50e2/weights/best.pt")

for i, img in enumerate(image_list):
    # read the image from file and skip it if it cannot be loaded
    frame = cv2.imread(img)
    if frame is None:
        print("Could not read {}".format(img))
        continue

    # run detection on the image
    detections = model(frame)[0]

    # loop over the detections
    for box in detections.boxes:
        # extract the label name (class ids are stored as floats)
        label = model.names.get(int(box.cls.item()))

        # each row starts with xmin, ymin, xmax, ymax, confidence
        data = box.data.tolist()[0]
        confidence = data[4]

        # filter out weak detections by ensuring the
        # confidence is greater than the minimum confidence
        if float(confidence) < CONFIDENCE_THRESHOLD:
            continue

        # draw the bounding box on the frame
        xmin, ymin, xmax, ymax = int(data[0]), int(data[1]), int(data[2]), int(data[3])
        cv2.rectangle(frame, (xmin, ymin), (xmax, ymax), GREEN, 2)

        # draw confidence and label, above the box when there is room
        y = ymin - 15 if ymin - 15 > 15 else ymin + 15
        cv2.putText(frame, "{} {:.1f}%".format(label, float(confidence * 100)), (xmin, y), cv2.FONT_HERSHEY_SIMPLEX, 0.5, GREEN, 2)

    # show the frame in its own window
    cv2.imshow("Img{}".format(i), frame)

# keep the windows open until "q" is pressed
while True:
    if cv2.waitKey(1) == ord("q"):
        break

cv2.destroyAllWindows()

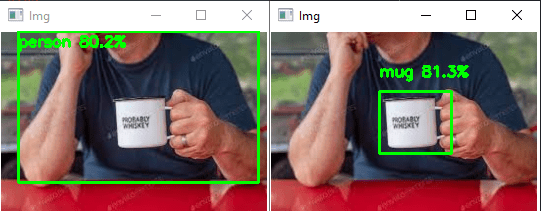
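The two decisions the script makes for each detection, keeping or dropping it and placing its label, can be tried without a model or a camera. The sketch below reproduces them on plain tuples. The sample detections are invented for illustration, and the helper names are not part of ultralytics or OpenCV.

```python
CONFIDENCE_THRESHOLD = 0.65


def keep_confident(detections, threshold=CONFIDENCE_THRESHOLD):
    """Keep detections (xmin, ymin, xmax, ymax, confidence) at or above threshold."""
    return [d for d in detections if d[4] >= threshold]


def label_y(ymin, offset=15):
    """Put the label above the box, or just below its top edge near the image border."""
    return ymin - offset if ymin - offset > offset else ymin + offset


# Invented detections: two confident boxes and one weak one.
sample = [
    (50, 40, 200, 220, 0.91),
    (10, 5, 90, 80, 0.70),
    (300, 300, 350, 360, 0.40),
]
kept = keep_confident(sample)
print(len(kept), [label_y(d[1]) for d in kept])  # 2 [25, 20]
```

Raising the threshold removes more false positives at the cost of missing some real mugs, so it is worth testing a few values on your own images.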

Results

We’ve achieved our goal: a new model that recognizes only mugs ({0: 'mug'}).

You can test this code with your webcam or with photos, for example, to see how the model and object recognition perform.

To enable the model to recognize more types of objects, labeled images of those objects must be added to the database and the model must be trained again.
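When new images are added, they have to be shared between the training folder and the test folder used for validation. The sketch below shows one reproducible way to split a list of file names. The 20 % validation share, the seed and the file names are assumptions chosen for illustration.

```python
import random


def split_dataset(image_names, val_fraction=0.2, seed=0):
    """Shuffle image names reproducibly and split them into (train, val) lists."""
    names = sorted(image_names)
    random.Random(seed).shuffle(names)
    # Keep at least one validation image when the dataset is very small.
    n_val = max(1, round(len(names) * val_fraction))
    return names[n_val:], names[:n_val]


images = [f"{i}.png" for i in range(20)]  # hypothetical file names
train, val = split_dataset(images)
print(len(train), len(val))  # 16 4
```

Each image's label file must be moved along with it so that images and labels stay paired.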