It’s possible to run object recognition models such as YOLO on a Raspberry Pi. Of course, because the board is much less powerful than a computer, real-time recognition is slower. However, it is perfectly possible to develop algorithms that use object recognition for applications that don’t require real-time recognition.

Hardware

- Raspberry Pi 4

- Screen, mouse and keyboard

- SD card with the 64-bit Raspberry Pi OS (formerly Raspbian)

- Camera (e.g. a USB webcam)

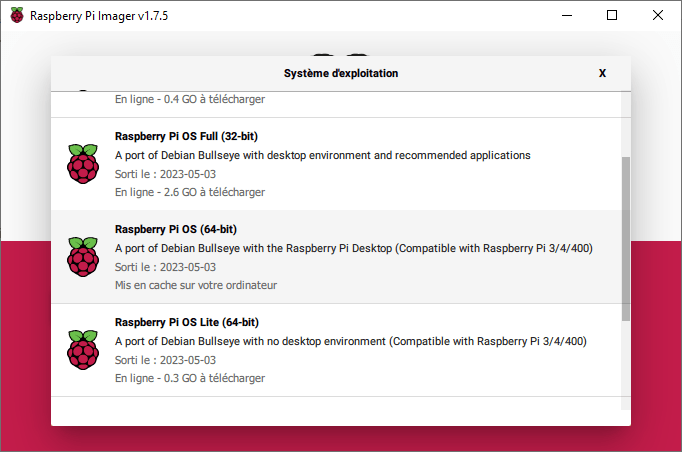

Configuration

To use YOLO, you’ll need to install the 64-bit version of Raspberry Pi OS. This version is available in the Raspberry Pi Imager software, under the Raspberry Pi OS (other) menu.

Python 3.9 or later is required.

The libraries to install are:

```shell
pip install numpy imutils opencv-python
pip install ultralytics
```
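Before running the detection script, a quick sanity check can confirm the setup. The sketch below uses only the standard library to check that the Python interpreter is 64-bit, that it is version 3.9 or later, and that the packages installed above can be imported. The function name `check_environment` is hypothetical, and the module list is an assumption based on the pip commands (`opencv-python` is imported as `cv2`).

```python
import importlib.util
import platform
import struct
import sys

# Import names of the packages installed with pip
# (assumption: opencv-python is imported as cv2).
REQUIRED_MODULES = ["numpy", "imutils", "cv2", "ultralytics"]


def check_environment(modules=REQUIRED_MODULES):
    """Hypothetical helper: report whether this system meets the tutorial's requirements."""
    return {
        # 8-byte pointers mean the Python interpreter itself is 64-bit
        "is_64bit": struct.calcsize("P") * 8 == 64,
        # 'aarch64' is expected on 64-bit Raspberry Pi OS
        "machine": platform.machine(),
        "python_ok": sys.version_info >= (3, 9),
        # find_spec returns None when a top-level module cannot be found
        "missing": [m for m in modules if importlib.util.find_spec(m) is None],
    }


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

An empty `missing` list and `True` for the two other checks suggest the configuration is ready.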

Object detection code

The code for object detection with YOLO is the same as on a computer.

First, the YOLO model is initialized (if the .pt file is not already present, it is downloaded when the program starts).

```python
model = YOLO("yolov5nu.pt")
```

Then open a video stream (or a video or image file).

```python
vs = VideoStream(src=0, resolution=(640, 640)).start()
# alternative: video_cap = cv2.VideoCapture(0)
```
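imutils’ `VideoStream` reads camera frames in a background thread, so `read()` returns the most recent frame immediately instead of waiting for the camera. The sketch below illustrates that idea with the standard library only; it is not imutils’ code. The class name `LatestFrameReader` is hypothetical, and `source` is assumed to be any object whose `read()` returns `(ok, frame)`, as `cv2.VideoCapture` does.

```python
import threading


class LatestFrameReader:
    """Hypothetical threaded reader that keeps only the latest frame from `source`.

    `source` is assumed to expose read() -> (ok, frame), like cv2.VideoCapture.
    """

    def __init__(self, source):
        self.source = source
        self.frame = None
        self.stopped = False
        self.lock = threading.Lock()
        self.thread = threading.Thread(target=self._update, daemon=True)

    def start(self):
        self.thread.start()
        return self  # allows LatestFrameReader(cap).start(), like VideoStream

    def _update(self):
        # Keep grabbing frames until stopped or the source runs out
        while not self.stopped:
            ok, frame = self.source.read()
            if not ok:
                self.stopped = True
                break
            with self.lock:
                self.frame = frame

    def read(self):
        # Return the most recent frame without blocking on the camera
        with self.lock:
            return self.frame

    def stop(self):
        self.stopped = True
        self.thread.join(timeout=1.0)
```

The trade-off of this design is that a slow consumer (here, the YOLO model) silently skips frames, which is usually what you want for live detection.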

We then run the model on each image:

```python
detections = model(frame)[0]
```
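`model(frame)` returns a list of results, one per input image, hence the `[0]`. For a detection model, each row of `detections.boxes.data` holds `[xmin, ymin, xmax, ymax, confidence, class_id]`, which is how the complete program reads it. The sketch below turns such rows into labelled boxes; it works on plain lists (as returned by `detections.boxes.data.tolist()`) so it can be tried without a camera. The function name `parse_detections` is hypothetical and the sample rows are made up.

```python
CONFIDENCE_THRESHOLD = 0.7


def parse_detections(rows, names, threshold=CONFIDENCE_THRESHOLD):
    """Convert rows of [xmin, ymin, xmax, ymax, confidence, class_id] into
    (label, confidence, (xmin, ymin, xmax, ymax)) tuples, dropping weak detections.

    `names` maps class ids to labels, like model.names in Ultralytics.
    """
    results = []
    for xmin, ymin, xmax, ymax, confidence, class_id in rows:
        if confidence < threshold:
            continue  # filter out weak detections
        label = names.get(int(class_id), "unknown")  # class ids come back as floats
        box = (int(xmin), int(ymin), int(xmax), int(ymax))  # pixel coordinates
        results.append((label, confidence, box))
    return results


# Made-up rows, not real model output
rows = [
    [12.7, 30.2, 200.9, 310.0, 0.91, 0.0],
    [50.0, 60.0, 80.0, 90.0, 0.35, 2.0],
]
print(parse_detections(rows, {0: "person", 2: "car"}))
# → [('person', 0.91, (12, 30, 200, 310))]
```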

Finally, we plot the detection results on the image and display them.

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
import datetime

import cv2
from imutils.video import VideoStream
from ultralytics import YOLO

# define some constants
CONFIDENCE_THRESHOLD = 0.7
GREEN = (0, 255, 0)

model = YOLO("yolov5nu.pt")
#model = YOLO("./runs/detect/yolov8n_v8_50e2/weights/best.pt")  # test trained model
print(model.names)

# initialize the video capture object
# (imutils only applies `resolution` when usePiCamera=True)
vs = VideoStream(src=0, resolution=(640, 640)).start()
#video_cap = cv2.VideoCapture(0)
#video_cap = cv2.VideoCapture("datasets\\Splash - 23011.mp4")

while True:
    # start time to compute the fps
    start = datetime.datetime.now()

    frame = vs.read()
    #ret, frame = video_cap.read()

    # if there are no more frames to process, break out of the loop
    if frame is None:
        break

    # run the YOLO model on the frame
    detections = model(frame)[0]

    # loop over the detections
    for box in detections.boxes:
        # extract the label name (class ids are returned as floats)
        label = model.names.get(int(box.cls.item()))

        # each row holds [xmin, ymin, xmax, ymax, confidence, class_id]
        data = box.data.tolist()[0]
        confidence = data[4]

        # filter out weak detections
        if float(confidence) < CONFIDENCE_THRESHOLD:
            continue

        # draw the bounding box on the frame
        xmin, ymin, xmax, ymax = int(data[0]), int(data[1]), int(data[2]), int(data[3])
        cv2.rectangle(frame, (xmin, ymin), (xmax, ymax), GREEN, 2)

        # draw confidence and label
        y = ymin - 15 if ymin - 15 > 15 else ymin + 15
        cv2.putText(frame, "{} {:.1f}%".format(label, float(confidence * 100)),
                    (xmin, y), cv2.FONT_HERSHEY_SIMPLEX, 0.5, GREEN, 2)

    # end time to compute the fps
    end = datetime.datetime.now()

    # show the time it took to process 1 frame
    total = (end - start).total_seconds()
    print(f"Time to process 1 frame: {total * 1000:.0f} milliseconds")

    # calculate the frames per second and draw it on the frame
    fps = f"FPS: {1 / total:.2f}"
    cv2.putText(frame, fps, (50, 50), cv2.FONT_HERSHEY_SIMPLEX, 2, (0, 0, 255), 2)

    # show the frame on screen
    cv2.imshow("Frame", frame)

    # press Q to exit
    if cv2.waitKey(1) == ord("q"):
        break

#video_cap.release()
vs.stop()
cv2.destroyAllWindows()
```
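In the program above, each label is drawn 15 pixels above its box, unless that would put it too close to the top of the image, in which case it is drawn just inside the box. That rule and the label text can be isolated in a small helper, which makes them easy to check without a camera. The name `label_annotation` is hypothetical; the text and position it returns are what the program passes to `cv2.putText`.

```python
def label_annotation(label, confidence, xmin, ymin, offset=15):
    """Hypothetical helper returning (text, (x, y)) for a detection label.

    Same rule as the program: draw `offset` px above the box, or `offset` px
    below its top edge when the label would be too close to the image top.
    """
    y = ymin - offset if ymin - offset > offset else ymin + offset
    text = "{} {:.1f}%".format(label, confidence * 100)
    return text, (xmin, y)


print(label_annotation("person", 0.912, 40, 100))  # → ('person 91.2%', (40, 85))
print(label_annotation("person", 0.912, 40, 10))   # → ('person 91.2%', (40, 25))
```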

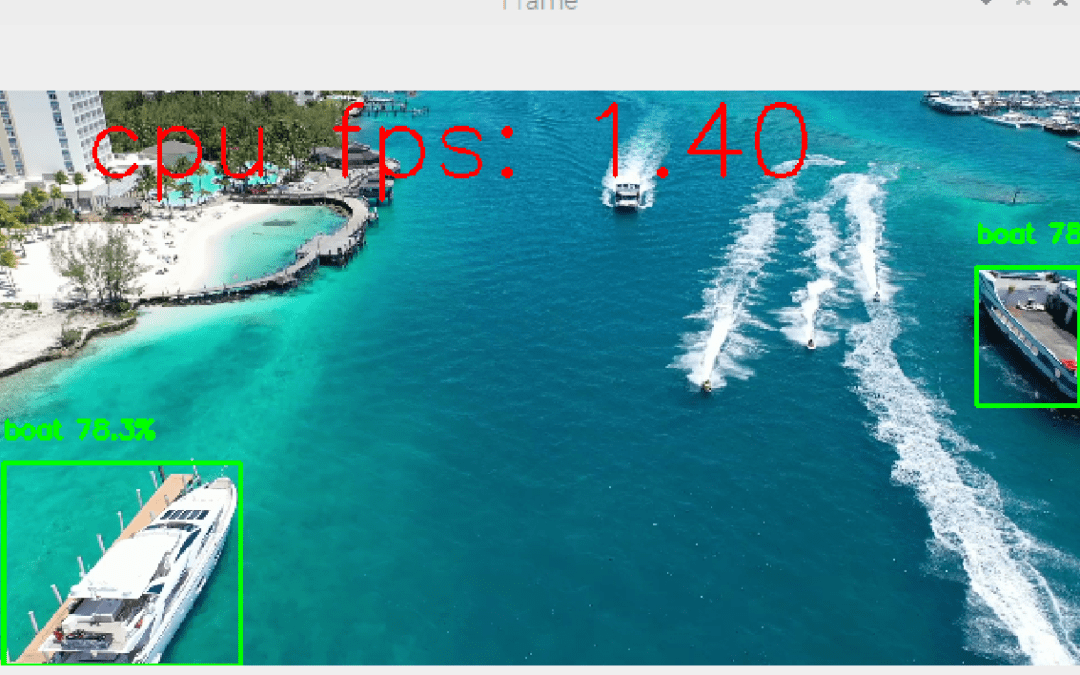

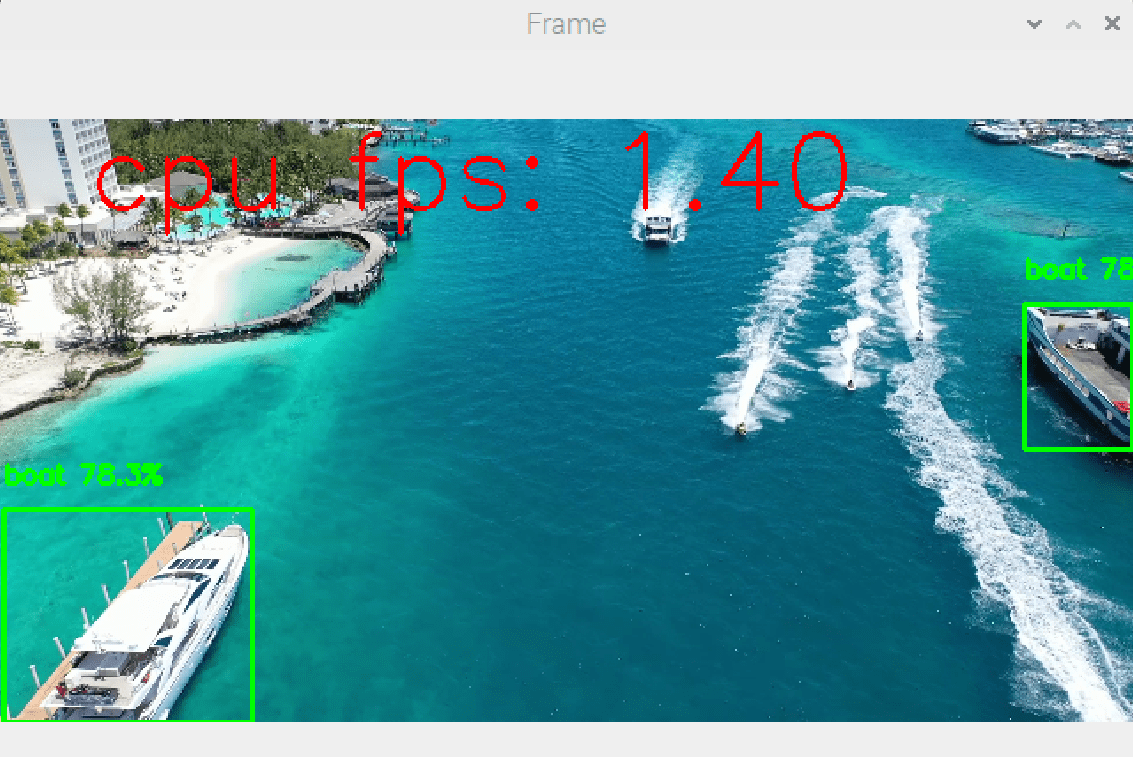

With this code, objects are detected at around one frame per second (1 FPS), compared with about 10 FPS on a computer.
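The program computes FPS from the processing time of a single frame, so the displayed value jumps from one frame to the next. For comparisons like the one above, a steadier figure can be obtained by averaging over a sliding window of recent frames. The class below is a hypothetical sketch using only the standard library; the default window of 30 frames is an arbitrary assumption, and the sample durations are made up.

```python
import time
from collections import deque


class FPSMeter:
    """Hypothetical FPS counter averaged over the last `window` frames."""

    def __init__(self, window=30):
        self.durations = deque(maxlen=window)  # seconds per frame, oldest dropped first
        self._start = None

    def start(self):
        # call at the top of each loop iteration
        self._start = time.perf_counter()

    def stop(self):
        # call once the frame has been processed
        self.add(time.perf_counter() - self._start)

    def add(self, seconds):
        self.durations.append(seconds)

    @property
    def fps(self):
        total = sum(self.durations)
        if total <= 0:
            return 0.0
        # frames processed divided by the time they took
        return len(self.durations) / total


# Made-up frame durations, not measured values
meter = FPSMeter(window=3)
for seconds in (1.0, 0.5, 1.5):
    meter.add(seconds)
print(f"FPS: {meter.fps:.2f}")  # → FPS: 1.00
```

In the main loop, `meter.start()` would replace the `start = datetime.datetime.now()` line, `meter.stop()` the end-time computation, and `meter.fps` would feed the `cv2.putText` call.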

Improving speed

Running an object recognition model on a Raspberry Pi is a real challenge, but there are ways to improve the results.

Our baseline, obtained with the yolov5nu model, is detection at 1 frame per second (1 FPS).

Change board

If you need high performance, it’s best to turn to single-board computers designed for artificial intelligence, such as the Nvidia Jetson. Among other things, these boards have processors suited to this computational load.

Change language

The code proposed here is written in Python, but there are also lighter implementations written in C++ that can considerably increase execution speed.

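Reduce the number of frames processed

You can reduce the computational load by running the model on only some of the frames, for example one frame in five, and reusing the last detections in between: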

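```python
img_counter = 0  # initialize once, before the main loop

# inside the loop: run the model only on every 5th frame;
# on the other frames, the previous detections are reused
if img_counter % 5 == 0:
    detections = model(frame)[0]
img_counter += 1
```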
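In the snippet above, frames on which the model is not run keep the previous `detections`, so the boxes drawn on screen lag slightly behind the image. The same logic can be wrapped in a small reusable helper. `EveryNth` and the stand-in `fake_model` are hypothetical names; the wrapped function is assumed to be any callable, such as a lambda around the YOLO model.

```python
class EveryNth:
    """Hypothetical wrapper: call `fn` on every `n`-th input, reuse its last result otherwise."""

    def __init__(self, fn, n=5):
        self.fn = fn
        self.n = n
        self.counter = 0
        self.last = None

    def __call__(self, frame):
        if self.counter % self.n == 0:
            self.last = self.fn(frame)  # the expensive call, e.g. model(frame)[0]
        self.counter += 1
        return self.last


calls = []


def fake_model(frame):
    # stand-in for the YOLO model: records which frames were processed
    calls.append(frame)
    return f"detections for frame {frame}"


detect = EveryNth(fake_model, n=5)
results = [detect(i) for i in range(12)]
print(calls)       # → [0, 5, 10]
print(results[7])  # → detections for frame 5
```

With the real model, this could be set up once as `detect = EveryNth(lambda f: model(f)[0], n=5)` and called as `detections = detect(frame)` inside the loop. Raising `n` lowers the load further, at the cost of boxes that lag more behind moving objects.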