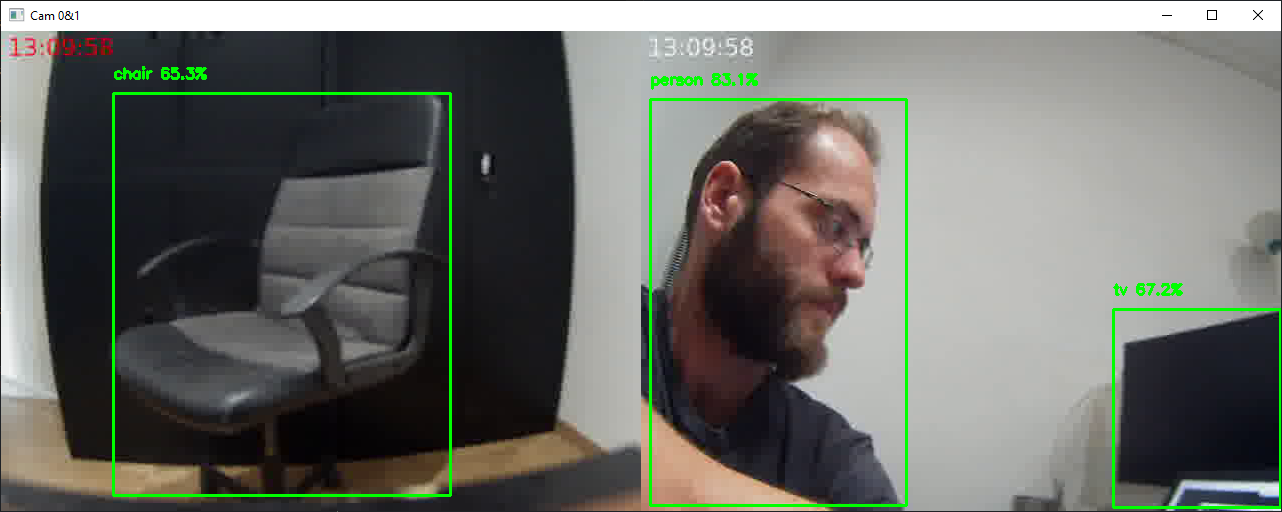

In this tutorial, we’ll look at how to synchronize video streams with Python and OpenCV. One of the challenges of video streaming is transmitting and acquiring high-quality video signals with as little delay as possible. Synchronizing several video streams makes it possible to process their data simultaneously, for example for object recognition.

Prerequisites: Streaming video between two machines

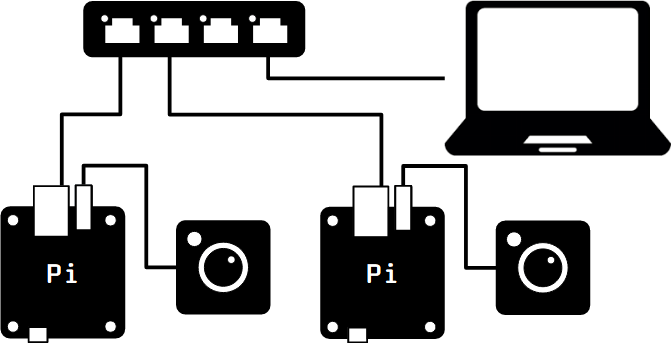

Hardware

- Computer with Python and OpenCV

- 2 video sources (file, stream, webcam, etc.)

- Internet or Ethernet connection

For this tutorial, I’m using two Orange Pi Zero boards, which generate video streams from USB cameras (ArduCam) and are connected to the computer’s network via an Ethernet switch.

Streaming

To create a stream from each camera, we use FFmpeg over the UDP protocol, specifying the IP address of the receiving computer and the port on which each stream will arrive.

Video stream 0

```shell
ffmpeg -video_size 640x480 -i /dev/video0 -f mpegts udp://{ip_address}:8553?pkt_size=1316
```

Video stream 1

```shell
ffmpeg -video_size 640x480 -i /dev/video0 -f mpegts udp://{ip_address}:8554?pkt_size=1316
```

To test a video stream on the computer, you can use the ffplay command:

```shell
ffplay udp://127.0.0.1:8553 # video stream 0
ffplay udp://127.0.0.1:8554 # video stream 1
```
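If ffplay is not available on the computer, a few lines of standard-library Python are enough to check that UDP packets actually reach a port. This is a minimal sketch, not part of the original setup: the helpers `open_udp_listener` and `receive_packet_sizes` are hypothetical names, and the listener should not run at the same time as ffplay or OpenCV on the same port, since they would compete for it.

```python
import socket

# MPEG-TS packets are 188 bytes long; pkt_size=1316 groups 7 of them (7 * 188 = 1316),
# so each datagram (1316 + 8-byte UDP header + 20-byte IP header = 1344 bytes)
# fits within a standard 1500-byte Ethernet MTU.
TS_PACKET_SIZE = 188


def open_udp_listener(port, host="0.0.0.0", timeout=2.0):
    """Bind a UDP socket on `port` (port 0 lets the OS pick a free one)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, port))
    sock.settimeout(timeout)
    return sock


def receive_packet_sizes(sock, count):
    """Return the sizes of up to `count` datagrams, stopping early on timeout."""
    sizes = []
    try:
        while len(sizes) < count:
            data, _addr = sock.recvfrom(65535)
            sizes.append(len(data))
    except socket.timeout:
        pass  # nothing more arrived within the timeout
    return sizes
```

For example, receive_packet_sizes(open_udp_listener(8553), 100) run on the computer while board 0 is streaming should return a list of datagram sizes, typically 1316 bytes each; an empty list after the timeout means nothing reached that port.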

In this tutorial, we’ll use the drawtext filter to add text to the video. This allows us to display the time and easily observe the delay.

```shell
-vf "drawtext=fontfile=/usr/share/fonts/truetype/ttf-dejavu/DejaVuSans-Bold.ttf: text='%{localtime\:%T}': fontsize=24: fontcolor=white@0.8: x=7: y=7"
```

N.B.: the ffmpeg command can also be launched from a Python script.
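As a sketch of launching the command from Python, the function below assembles the same ffmpeg command for a given IP address and port. It assumes the camera device, resolution, font path and ports used in this tutorial (adjust the font path to wherever DejaVu is installed on your board); build_stream_cmd is a hypothetical helper. The raw string (r"...") keeps the \: escape that drawtext expects without triggering Python's invalid-escape warning, and the doubled braces become the single braces ffmpeg needs after str.format().

```python
FONT = "/usr/share/fonts/truetype/ttf-dejavu/DejaVuSans-Bold.ttf"


def build_stream_cmd(ip_address, port, color="white", device="/dev/video0",
                     size="640x480", font=FONT):
    """Return an ffmpeg command streaming `device` as MPEG-TS over UDP,
    with the local time drawn in the top-left corner."""
    drawtext = (
        r"drawtext=fontfile={font}: text='%{{localtime\:%T}}': "
        r"fontsize=24: fontcolor={color}@0.8: x=7: y=7"
    ).format(font=font, color=color)
    return (
        'ffmpeg -video_size {size} -i {device} -vf "{vf}" '
        "-f mpegts udp://{ip}:{port}?pkt_size=1316"
    ).format(size=size, device=device, vf=drawtext, ip=ip_address, port=port)
```

For instance, build_stream_cmd(ip_address, 8553, color="red") produces the command for video stream 0 with red text.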

Launching commands via SSH

For simplicity’s sake, we run the ffmpeg commands from the Python script via SSH, using the paramiko library:

```python
import socket

import paramiko

# IP address of this computer (the one receiving the streams)
hostname = socket.gethostname()
ip_address = socket.gethostbyname(hostname)
# If this returns a loopback address (127.x.x.x), set the address manually:
# ip_address = "192.168.1.70"
print(f"IP Address: {ip_address}")

# One SSH client per Orange Pi
ssh0 = paramiko.SSHClient()
ssh0.set_missing_host_key_policy(paramiko.AutoAddPolicy())
ssh1 = paramiko.SSHClient()
ssh1.set_missing_host_key_policy(paramiko.AutoAddPolicy())

ssh0.connect("192.168.1.32", username="root", password="root")
ssh1.connect("192.168.1.33", username="root", password="root")

# Alternative: run a Python streaming script on the boards instead of ffmpeg
# stream_cmd0 = "python3 video-stream.py {}:{}?pkt_size=1316".format(ip_address, 8553)
# stream_cmd1 = "python3 video-stream.py {}:{}?pkt_size=1316".format(ip_address, 8554)

# ffmpeg commands with the local time drawn on each stream
# ({{ }} becomes { } after str.format(); the r prefix keeps the \: escape as-is)
stream_cmd0 = r"""ffmpeg -video_size 640x480 -i /dev/video0 -vf "drawtext=fontfile=/usr/share/fonts/truetype/ttf-dejavu/DejaVuSans-Bold.ttf: text='%{{localtime\:%T}}': fontsize=24: fontcolor=red@0.8: x=7: y=7" -f mpegts udp://{}:{}?pkt_size=1316""".format(ip_address, 8553)
stream_cmd1 = r"""ffmpeg -video_size 640x480 -i /dev/video0 -vf "drawtext=fontfile=/usr/share/fonts/truetype/ttf-dejavu/DejaVuSans-Bold.ttf: text='%{{localtime\:%T}}': fontsize=24: fontcolor=white@0.8: x=7: y=7" -f mpegts udp://{}:{}?pkt_size=1316""".format(ip_address, 8554)

# exec_command() returns immediately; ffmpeg keeps running on each board
ssh1_stdin, ssh1_stdout, ssh1_stderr = ssh1.exec_command(stream_cmd1)
ssh0_stdin, ssh0_stdout, ssh0_stderr = ssh0.exec_command(stream_cmd0)
```

N.B.: don’t forget to close the SSH connections at the end of the program with ssh0.close() and ssh1.close().
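On many Linux systems (Debian and Ubuntu among them), socket.gethostbyname(socket.gethostname()) returns 127.0.1.1, in which case the boards would stream to themselves; this is presumably why a fixed address is kept as a commented-out fallback. A common alternative, sketched here with the hypothetical helper get_lan_ip, is to "connect" a UDP socket towards one of the boards and read back the local address the OS chose. Connecting a UDP socket sends no packet; it only selects a route. By default it probes the first board's address from the script above, which is an assumption about your network.

```python
import socket


def get_lan_ip(probe_host="192.168.1.32", probe_port=9, fallback="127.0.0.1"):
    """Return the local IP address the OS would use to reach `probe_host`.

    connect() on a UDP socket sends nothing: it only selects a route
    and therefore a source address, which getsockname() reports.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        sock.connect((probe_host, probe_port))
        return sock.getsockname()[0]
    except OSError:
        # No route to the probe host (e.g. network down): use the fallback
        return fallback
    finally:
        sock.close()
```

In the script, ip_address = get_lan_ip() would then replace the gethostbyname() lookup.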

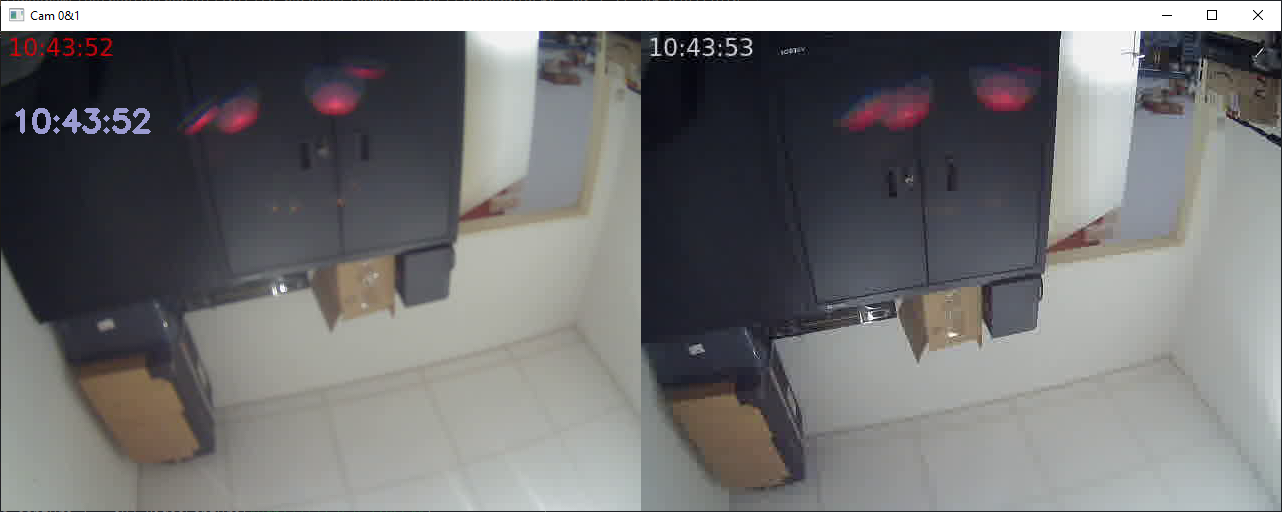

Receiving unsynchronized streams

To receive the video streams, we use OpenCV with Python.

First, we open the two video streams and read them in a loop for as long as they are open.

```python
cap0 = cv2.VideoCapture("udp://127.0.0.1:8553")
cap1 = cv2.VideoCapture("udp://127.0.0.1:8554")
```

For practical reasons, we concatenate the images in the same window, making sure they have compatible dimensions: np.concatenate along axis=1 requires both images to have the same height and the same number of channels, hence the resize.

```python
frame0 = cv2.resize(frame0, (640, 480))
frame1 = cv2.resize(frame1, (640, 480))
Hori = np.concatenate((frame0, frame1), axis=1)
```

Finally, we overlay the computer’s local time on the combined image for comparison.

```python
Hori = cv2.putText(Hori, date_time, (10, 100), font, 1, (210, 155, 155), 4, cv2.LINE_4)
```

Here is the complete code for capturing non-synchronized video streams

N.B.: This code works once the ffmpeg commands have been run on each machine.

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
import numpy as np
import cv2
import datetime

#<add code to run ffmpeg command via ssh>

cap0 = cv2.VideoCapture("udp://127.0.0.1:8553")
cap1 = cv2.VideoCapture("udp://127.0.0.1:8554")

while cap0.isOpened() and cap1.isOpened():
    # Capture frame-by-frame
    ret0, frame0 = cap0.read()
    ret1, frame1 = cap1.read()

    # Get current date and time
    now = datetime.datetime.now()
    date_time = now.strftime("%H:%M:%S")
    font = cv2.FONT_HERSHEY_SIMPLEX

    # write the date time in the video frame
    #frame0 = cv2.putText(frame0, date_time, (10, 100), font, 1, (210, 155, 155), 4, cv2.LINE_4)
    #frame1 = cv2.putText(frame1, date_time, (10, 100), font, 1, (210, 155, 155), 4, cv2.LINE_4)

    #if (ret0):
    #    # Display the resulting frame
    #    cv2.imshow('Cam 0', frame0)
    #if (ret1):
    #    # Display the resulting frame
    #    cv2.imshow('Cam 1', frame1)

    if ret0 and ret1:
        frame0 = cv2.resize(frame0, (640, 480))
        frame1 = cv2.resize(frame1, (640, 480))
        Hori = np.concatenate((frame0, frame1), axis=1)
        Hori = cv2.putText(Hori, date_time, (10, 100), font, 1, (210, 155, 155), 4, cv2.LINE_4)
        cv2.imshow('Cam 0&1', Hori)

    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

#release captures
cap0.release()
cap1.release()
cv2.destroyAllWindows()
```

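We observe a delay of about one second between the two video streams, which is not acceptable if we want to process the images synchronously. A likely cause is that both streams are read one after the other in the same loop: when the loop falls behind, frames pile up in the capture buffers, and the images displayed are no longer the most recent ones.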

Stream capture with Multithreading

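The simplest solution is to dedicate one thread to each capture: each thread reads its stream continuously and keeps only the most recent frame, and the main loop displays the latest frame from each stream. To do this, we use the threading package and create a VideoStream class that manages stream playback in its own thread: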

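```python
import cv2
from threading import Thread, Lock


class VideoStream:
    """Reads a video source in its own thread and keeps only the latest frame."""

    def __init__(self, src=0):
        self.cap = cv2.VideoCapture(src)
        self.ret, self.frame = self.cap.read()
        self.started = False
        self.read_lock = Lock()

    def start(self):
        if self.started:
            return None
        self.started = True
        self.thread = Thread(target=self.update, args=(), daemon=True)
        self.thread.start()
        return self

    def update(self):
        # Read frames continuously, overwriting the previous one
        while self.started:
            ret, frame = self.cap.read()
            with self.read_lock:
                self.ret, self.frame = ret, frame

    def isOpened(self):
        return self.cap.isOpened()

    def read(self):
        # Return a copy of the latest frame (None if no frame has been received yet)
        with self.read_lock:
            ret = self.ret
            frame = self.frame.copy() if self.frame is not None else None
        return ret, frame

    def release(self):
        # Stop the capture thread, then release the underlying VideoCapture
        if self.started:
            self.started = False
            self.thread.join()
        self.cap.release()

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc_value, traceback):
        self.release()
```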

We can then instantiate our two objects, cap0 and cap1, and use them in the display loop:

```python
cap0 = VideoStream("udp://127.0.0.1:8553").start()
cap1 = VideoStream("udp://127.0.0.1:8554").start()

while cap0.isOpened() and cap1.isOpened():
    ret0, frame0 = cap0.read()
    ret1, frame1 = cap1.read()

    # Get current date and time
    #date_time = str(datetime.datetime.now())
    now = datetime.datetime.now()
    date_time = now.strftime("%H:%M:%S")
    font = cv2.FONT_HERSHEY_SIMPLEX

    if ret0 and ret1:
        frame0 = cv2.resize(frame0, (640, 480))
        frame1 = cv2.resize(frame1, (640, 480))
        Hori = np.concatenate((frame0, frame1), axis=1)
        Hori = cv2.putText(Hori, date_time, (10, 100), font, 1, (210, 155, 155), 4, cv2.LINE_4)
        cv2.imshow('Cam 0&1', Hori)

    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

#release capture
cap0.release()
cap1.release()
cv2.destroyAllWindows()
```
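The key design choice in VideoStream is that update() overwrites a single frame instead of queuing frames, so read() always returns the most recent image from each source and older ones are dropped. The standard-library sketch below isolates that idea without any camera and contrasts it with a FIFO queue. LatestSlot and its sequence counter are illustrative additions, not part of the original class.

```python
import queue
from threading import Lock, Thread


class LatestSlot:
    """Thread-safe single-slot buffer: each put() replaces the previous value."""

    def __init__(self):
        self._lock = Lock()
        self._value = None
        self._seq = 0  # how many values have been put so far

    def put(self, value):
        with self._lock:
            self._value = value
            self._seq += 1

    def get(self):
        """Return (sequence number, latest value); the value is None until the first put()."""
        with self._lock:
            return self._seq, self._value


def produce(put, n_frames):
    """Simulated camera thread: pushes numbered frames as fast as it can."""
    for i in range(n_frames):
        put(f"frame {i}")


fifo = queue.Queue()  # frames wait in arrival order
slot = LatestSlot()   # only the newest frame is kept

for sink in (fifo.put, slot.put):
    producer = Thread(target=produce, args=(sink, 30))
    producer.start()
    producer.join()

print(fifo.get())  # → frame 0  (the oldest frame; 29 more are still queued)
print(slot.get())  # → (30, 'frame 29')
```

A consumer can also compare the sequence number with the one from its previous get() to skip frames it has already processed.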

The two video streams are now synchronized, and it is possible to save them to a video file or perform image processing on both streams at the same time.

Full code for receiving synchronized video streams

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
# pip install opencv-python
# pip install paramiko
import numpy as np
import cv2
import paramiko
import time
import datetime
from threading import Thread, Lock

#computer ip address
import socket
hostname = socket.gethostname()
ip_address = socket.gethostbyname(hostname)
# If this returns a loopback address (127.x.x.x), set the address manually:
#ip_address= "192.168.1.70"
print(f"IP Address: {ip_address}")

ssh0 = paramiko.SSHClient()
ssh0.set_missing_host_key_policy(paramiko.AutoAddPolicy())
ssh0.connect("192.168.1.32", username="root", password="root")

ssh1 = paramiko.SSHClient()
ssh1.set_missing_host_key_policy(paramiko.AutoAddPolicy())
ssh1.connect("192.168.1.33", username="root", password="root")

#python
#stream_cmd0 = "python3 video-stream.py {}:{}?pkt_size=1316".format(ip_address,8553)
#stream_cmd1 = "python3 video-stream.py {}:{}?pkt_size=1316".format(ip_address,8554)

#ffmpeg ({{ }} becomes { } after str.format(); the r prefix keeps the \: escape as-is)
stream_cmd0 = r"""ffmpeg -video_size 640x480 -i /dev/video0 -vf "drawtext=fontfile=/usr/share/fonts/truetype/ttf-dejavu/DejaVuSans-Bold.ttf: text='%{{localtime\:%T}}': fontsize=24: fontcolor=red@0.8: x=7: y=7" -f mpegts udp://{}:{}?pkt_size=1316""".format(ip_address, 8553)
stream_cmd1 = r"""ffmpeg -video_size 640x480 -i /dev/video0 -vf "drawtext=fontfile=/usr/share/fonts/truetype/ttf-dejavu/DejaVuSans-Bold.ttf: text='%{{localtime\:%T}}': fontsize=24: fontcolor=white@0.8: x=7: y=7" -f mpegts udp://{}:{}?pkt_size=1316""".format(ip_address, 8554)

#manual
#ffmpeg -video_size 640x480 -i /dev/video0 -f mpegts udp://192.168.1.70:8553?pkt_size=1316
#ffmpeg -video_size 640x480 -i /dev/video0 -f mpegts udp://192.168.1.70:8554?pkt_size=1316

ssh1_stdin, ssh1_stdout, ssh1_stderr = ssh1.exec_command(stream_cmd1)
ssh0_stdin, ssh0_stdout, ssh0_stderr = ssh0.exec_command(stream_cmd0)
#print(ssh0_stdout.read().decode())
#print(ssh1_stdout.read().decode())


class VideoStream:
    """Reads a video source in its own thread and keeps only the latest frame."""

    def __init__(self, src=0):
        self.cap = cv2.VideoCapture(src)
        self.ret, self.frame = self.cap.read()
        self.started = False
        self.read_lock = Lock()

    def start(self):
        if self.started:
            return None
        self.started = True
        self.thread = Thread(target=self.update, args=(), daemon=True)
        self.thread.start()
        return self

    def update(self):
        # Read frames continuously, overwriting the previous one
        while self.started:
            ret, frame = self.cap.read()
            with self.read_lock:
                self.ret, self.frame = ret, frame

    def isOpened(self):
        return self.cap.isOpened()

    def read(self):
        # Return a copy of the latest frame (None if no frame has been received yet)
        with self.read_lock:
            ret = self.ret
            frame = self.frame.copy() if self.frame is not None else None
        return ret, frame

    def release(self):
        # Stop the capture thread, then release the underlying VideoCapture
        if self.started:
            self.started = False
            self.thread.join()
        self.cap.release()

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc_value, traceback):
        self.release()


print("waiting for response...")
#cap0 = cv2.VideoCapture("udp://127.0.0.1:8553")
#cap1 = cv2.VideoCapture("udp://127.0.0.1:8554")
cap0 = VideoStream("udp://127.0.0.1:8553").start()
cap1 = VideoStream("udp://127.0.0.1:8554").start()

while cap0.isOpened() and cap1.isOpened():
    ret0, frame0 = cap0.read()
    ret1, frame1 = cap1.read()

    # Get current date and time
    #date_time = str(datetime.datetime.now())
    now = datetime.datetime.now()
    date_time = now.strftime("%H:%M:%S")
    font = cv2.FONT_HERSHEY_SIMPLEX

    if ret0 and ret1:
        frame0 = cv2.resize(frame0, (640, 480))
        frame1 = cv2.resize(frame1, (640, 480))
        Hori = np.concatenate((frame0, frame1), axis=1)
        Hori = cv2.putText(Hori, date_time, (10, 100), font, 1, (210, 155, 155), 4, cv2.LINE_4)
        cv2.imshow('Cam 0&1', Hori)

    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

#release capture
cap0.release()
cap1.release()
cv2.destroyAllWindows()

#kill ffmpeg
ssh1_stdin, ssh1_stdout, ssh1_stderr = ssh1.exec_command("killall ffmpeg")
ssh0_stdin, ssh0_stdout, ssh0_stderr = ssh0.exec_command("killall ffmpeg")

#close ssh
ssh0.close()
ssh1.close()
```
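In the full script, ffmpeg is stopped and the SSH connections are closed only if the loop exits normally; an exception inside the loop would leave both boards streaming. One way to guarantee the cleanup is a small context manager. remote_streams is a hypothetical helper that relies only on the exec_command() and close() methods of paramiko.SSHClient, and it waits for killall to finish (through the channel's recv_exit_status()) before closing each connection.

```python
from contextlib import contextmanager


@contextmanager
def remote_streams(clients, commands, stop_cmd="killall ffmpeg"):
    """Start one command per SSH client; on exit, always stop it and close the client."""
    try:
        for client, cmd in zip(clients, commands):
            client.exec_command(cmd)  # returns immediately; ffmpeg keeps running remotely
        yield clients
    finally:
        for client in clients:
            try:
                _stdin, stdout, _stderr = client.exec_command(stop_cmd)
                stdout.channel.recv_exit_status()  # wait until killall has finished
            except Exception as exc:  # keep cleaning up the other boards
                print(f"could not stop the stream: {exc}")
            finally:
                client.close()
```

The capture and display loop would then sit inside with remote_streams([ssh0, ssh1], [stream_cmd0, stream_cmd1]):, replacing the separate exec_command(), killall and close() calls.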

Applications

- CCTV surveillance camera network

- Object recognition on synchronized video streams